Almost every game runs into performance problems at some point and has to be optimized. But how do you actually optimize a game? There are general guidelines, like “don’t use too many draw calls,” but they don’t apply to every situation. To pinpoint the bottlenecks in your own game, you need to profile it.

The engineering team here at Funovus recently had to optimize the performance of one of our games, Wild Castle, and in this post I’ll walk through the process we used to identify our issues.

Commenting Code Out

At one point or another, we’ve all used the comment-it-out method of tracking down an issue: disable a block of code, then see how the program behaves (or, in our case, measure the performance).

For Wild Castle, we have a custom rendering manager that batches our units and sends instanced draw calls to the GPU to reduce the number of draw calls. The basic structure of our rendering code is as follows:

```csharp
struct InstancedProperties {
    // per-unit data, like unitColor, animationState, etc.
}

InstancedProperties[] unitData;

void render() {
    //...
    for (int i = 0; i < unitMaterials.Length; i++) {
        Graphics.DrawInstanced(/* ... */, unitData);
    }
    //...
}
```

In this code, unitMaterials is the collection of passes we render, one material per pass. We prepared a debug build that let us skip individual passes in this collection. After comparing measurements with and without certain passes, we noticed that the outline pass was very costly, even though most units don’t have an outline. On investigating, we found the following vertex shader code in our outline shader:

```hlsl
half hasOutline; // Instanced property

v2f vert(input IN) {
    //...
    float3 outlinePos = /* compute outline vertex position */;
    position = outlinePos * hasOutline;
    //...
}
```

It turns out that we were handling outlines through our existing per-unit data structure, the same way we handle other unit properties like color and animation frames. In other words, the shader decides whether an outline should be rendered: if a unit doesn’t need one, hasOutline is 0 and the final vertex position is multiplied by 0. Every outline vertex then collapses to a single point, so the triangles are degenerate and nothing is drawn. While this works, it wastes a lot of GPU work: the vertex shader still runs for every outline vertex of every unit, even though the result never appears on screen.
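The post doesn’t show how the debug build skips passes. A minimal sketch, assuming a hypothetical skipPassMask bitmask (bit i set means pass i is skipped) checked inside the pass loop from render(), might look like this; the pass names are also hypothetical, and the draw call is replaced by a list so the sketch runs outside Unity:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical pass list: the post only names the main and outline passes.
string[] unitMaterials = { "Main", "Outline", "Shadow" };

// Hypothetical debug switch: bit i set => skip pass i.
int skipPassMask = 0;

// Returns the passes that would be drawn for a given mask.
List<string> RenderPasses(int mask)
{
    var drawn = new List<string>();
    for (int i = 0; i < unitMaterials.Length; i++)
    {
        if ((mask & (1 << i)) != 0)
        {
            continue; // pass disabled for this measurement run
        }
        // Stand-in for the Graphics.DrawInstanced(/* ... */, unitData) call.
        drawn.Add(unitMaterials[i]);
    }
    return drawn;
}

Console.WriteLine(string.Join(", ", RenderPasses(skipPassMask))); // Main, Outline, Shadow
Console.WriteLine(string.Join(", ", RenderPasses(1 << 1)));       // Main, Shadow
```

Because the mask only changes which passes are submitted, one debug build can measure any combination of passes without recompiling.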

Estimating Your Gain

One thing I’d like to emphasize is understanding how much impact an optimization can have. There are countless things that could be optimized, each taking a different amount of effort and providing a different amount of improvement. So how do we decide which ones to work on first?

To answer that, we measure the upper bound of what each optimization could gain. An easy way to do this is to disable the feature entirely and compare the results before and after, which is exactly what we did earlier when disabling specific rendering passes. No optimization of a feature can save more than removing it outright. This might seem too obvious to mention, but knowing the potential impact of an optimization is extremely important: if we spend a lot of effort optimizing some code only to find that it gives us very little gain, that effort is wasted.
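As a rough illustration of the upper-bound idea, the sketch below times a frame with a feature enabled and disabled and takes the difference. The RenderFrame workload and its outline toggle are hypothetical stand-ins for real rendering, and Stopwatch only captures CPU time spent in the callback, so for GPU-bound features you would compare frame times from a profiler or on-device FPS instead:

```csharp
using System;
using System.Diagnostics;

// Average wall-clock time of `frames` calls to `renderFrame`, in milliseconds.
double AverageFrameMs(Action renderFrame, int frames)
{
    var sw = Stopwatch.StartNew();
    for (int i = 0; i < frames; i++)
    {
        renderFrame();
    }
    sw.Stop();
    return sw.Elapsed.TotalMilliseconds / frames;
}

// Upper bound on the savings from optimizing a feature: frame time with the
// feature enabled minus frame time with it removed entirely (never negative).
double UpperBoundGainMs(double enabledMs, double disabledMs) =>
    Math.Max(0.0, enabledMs - disabledMs);

// Hypothetical workload standing in for "render with/without the outline pass".
bool outlinesEnabled = true;
double sink = 0;
void RenderFrame()
{
    for (int i = 0; i < 200_000; i++) sink += Math.Sqrt(i);     // "main pass"
    if (outlinesEnabled)
        for (int i = 0; i < 150_000; i++) sink += Math.Sqrt(i); // "outline pass"
}

double withFeature = AverageFrameMs(RenderFrame, 50);
outlinesEnabled = false;
double withoutFeature = AverageFrameMs(RenderFrame, 50);

Console.WriteLine($"enabled: {withFeature:F3} ms, disabled: {withoutFeature:F3} ms");
Console.WriteLine($"upper bound on savings: {UpperBoundGainMs(withFeature, withoutFeature):F3} ms/frame");
```

The Math.Max clamp reflects that measurement noise can make the disabled run look slower; a negative difference just means there is nothing measurable to gain.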

In the case of our outlines, the optimization would require reworking how we handle per-unit data, which is quite costly. However, our measurements showed that the unit rendering passes cost the most, and in particular that the outline pass (which mostly renders nothing) cost almost as much as the main unit rendering pass! After we restructured our instancing manager to skip the outline pass for units without outlines, we saw around a 30% decrease in vertices shaded per second, which led to a significant FPS improvement on our target device.
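The restructured instancing manager isn’t shown here, but the core idea can be sketched as moving the hasOutline decision from the vertex shader to the CPU: build a compact batch containing only the units that actually have an outline, and skip the draw entirely when it is empty. The tuple-based unit list and the function names are hypothetical, and the draw call is replaced by a print so the sketch runs outside Unity:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical per-unit data; the real InstancedProperties holds more fields
// (color, animation state, ...). Tuples keep this sketch self-contained.
var units = new List<(int Id, bool HasOutline)>
{
    (0, false), (1, true), (2, false), (3, false), (4, true),
};

// Before: every unit goes to the outline pass, and the vertex shader
// multiplies the position by hasOutline (0 or 1) to hide unwanted outlines.
List<int> OutlineBatchShaderMasked() => units.ConvertAll(u => u.Id);

// After: the CPU keeps only units that really have an outline, so the GPU
// never shades vertices that would just be collapsed to a point.
List<int> OutlineBatchFiltered()
{
    var batch = new List<int>();
    foreach (var u in units)
    {
        if (u.HasOutline) batch.Add(u.Id);
    }
    return batch;
}

var filtered = OutlineBatchFiltered();
if (filtered.Count > 0)
{
    // Stand-in for an instanced draw of the outline material with `filtered`.
    Console.WriteLine($"outline draw: {filtered.Count} instances (was {OutlineBatchShaderMasked().Count})");
}
// prints "outline draw: 2 instances (was 5)"
```

In a real instancing manager, such a batch would more likely be updated when a unit’s outline state changes than rebuilt every frame.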

Using Profiling Tools

For another of our optimizations, we spotted the issue using a profiling tool. By adding some annotations to our instancing manager, we can record when each of our draw calls is made:

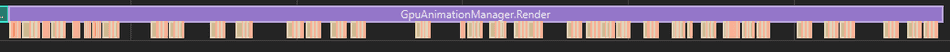

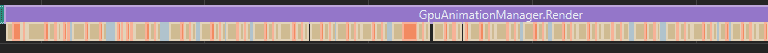

In the profiler timeline above, the orange and tan regions show when we are doing real work, while the purple bar labeled GpuAnimationManager.Render spans the time we spend in our main rendering function. Clearly, there are a lot of gaps between the blocks of useful work, so what is going on here?

For reference, here is a more complete structure of our rendering code:

```csharp
// Update is called every frame
void Update() {
    //...

    // Start of the purple block
    Annotater.StartRecordingCodeBlock("GpuAnimationManager.Render");

    render();

    // End of the purple block
    Annotater.EndRecordingCodeBlock();

    //...
}

void render() {
    foreach (var unitType in allUnits) {
        foreach (var effect in unitType.meshEffects) {
            if (!effect.isUsed) {
                continue;
            }

            // Start of the tan/orange blocks
            Annotater.StartRecordingCodeBlock("Rendering");

            var unitMaterials = effect.Materials;
            var unitData = /* get units that are using the effect */;

            for (int i = 0; i < unitMaterials.Length; i++) {
                Graphics.DrawInstanced(/* ... */, unitData);
            }

            //...

            // End of the orange/tan blocks
            Annotater.EndRecordingCodeBlock();
        }
    }
}
```
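The post doesn’t say how Annotater is implemented. A minimal stand-in, assuming it simply records named, nested start/end timestamps, is sketched below; it also shows how the gaps can be quantified as the time inside the outer block that no inner block covers. (In Unity itself, built-in ways to add such markers are Profiler.BeginSample/Profiler.EndSample from UnityEngine.Profiling and ProfilerMarker from Unity.Profiling.)

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

// Hypothetical stand-in for Annotater: records named, possibly nested blocks
// as (name, depth, start, end) entries on a single timeline.
var clock = Stopwatch.StartNew();
var open = new Stack<(string Name, double StartMs)>();
var recorded = new List<(string Name, int Depth, double StartMs, double EndMs)>();

void StartRecordingCodeBlock(string name) =>
    open.Push((name, clock.Elapsed.TotalMilliseconds));

void EndRecordingCodeBlock()
{
    var (name, startMs) = open.Pop(); // throws if Start/End calls are unbalanced
    recorded.Add((name, open.Count, startMs, clock.Elapsed.TotalMilliseconds));
}

// Mirrors Update()/render(): one outer block containing two inner blocks.
StartRecordingCodeBlock("GpuAnimationManager.Render");
for (int pass = 0; pass < 2; pass++)
{
    StartRecordingCodeBlock("Rendering");
    // ... draw calls would go here ...
    EndRecordingCodeBlock();
}
EndRecordingCodeBlock();

// Gap time = outer duration minus the time covered by depth-1 blocks.
var outer = recorded[^1];
double innerMs = 0;
foreach (var r in recorded)
{
    if (r.Depth == 1) innerMs += r.EndMs - r.StartMs;
}
double gapMs = (outer.EndMs - outer.StartMs) - innerMs;
Console.WriteLine($"recorded {recorded.Count} blocks, gap time {gapMs:F4} ms");
```

Inner blocks finish first, so they appear in the list before the outer block; the depth recorded for each entry is what lets a timeline viewer stack them as in the capture above.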

One thing to note is that we have a concept called mesh effects. A mesh effect is essentially a material swap that gives certain units a special effect (for example, burning, frozen, or poisoned). We have a total of 27 different mesh effects, and each unit usually has only one active at a time.

Because of our instancing structure, for every unit type we loop through all of the mesh effects, check which units are using each one (if any), and then render those units. This seems reasonable at first, but after more investigation we found that iterating through all of the mesh effects was actually the culprit behind the gaps in the timeline!

After refactoring our code to track the used mesh effects more efficiently, we were able to eliminate most of the gaps, saving around 1.6 ms of CPU time per frame. That’s roughly 10% (1.6 / 16.67 ≈ 9.6%) of our 16.67 ms frame budget at 60 FPS that was previously being wasted!
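The post doesn’t describe exactly how the refactored code tracks used mesh effects. One plausible approach, sketched here under that assumption, is to keep a per-unit-type usage count for each effect plus a compact list of the effects in use, updated only when a unit gains or loses an effect, so the render loop visits only active effects instead of all 27. The function names and effect indices are hypothetical:

```csharp
using System;
using System.Collections.Generic;

const int MeshEffectCount = 27; // total number of mesh effects in Wild Castle

// Hypothetical bookkeeping for ONE unit type: how many units use each effect,
// plus a compact list of the effects whose count is non-zero.
var usageCount = new int[MeshEffectCount];
var activeEffects = new List<int>();

void OnEffectAdded(int effect)
{
    if (usageCount[effect]++ == 0) activeEffects.Add(effect); // 0 -> 1: now active
}

void OnEffectRemoved(int effect)
{
    if (--usageCount[effect] == 0) activeEffects.Remove(effect); // 1 -> 0: inactive
}

// Old approach: scan every effect and skip the unused ones.
int NaiveVisits()
{
    int visits = 0;
    for (int e = 0; e < MeshEffectCount; e++)
    {
        visits++;
        if (usageCount[e] == 0) continue;
        // ... gather units using effect e and draw them ...
    }
    return visits;
}

// New approach: visit only the effects known to be in use.
int TrackedVisits()
{
    int visits = 0;
    foreach (int e in activeEffects)
    {
        visits++;
        // ... gather units using effect e and draw them ...
    }
    return visits;
}

// Hypothetical state: two units use effect 3, one unit uses effect 7.
OnEffectAdded(3); OnEffectAdded(3); OnEffectAdded(7);
Console.WriteLine($"naive: {NaiveVisits()} effects visited, tracked: {TrackedVisits()}"); // naive: 27, tracked: 2
OnEffectRemoved(7);
Console.WriteLine($"after effect 7 ends, tracked: {TrackedVisits()}"); // tracked: 1
```

With this design, the bookkeeping cost moves from every frame to the moments when a unit’s effect changes, and the per-frame loop scales with the number of effects in use rather than the total number of effects.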

Final Takeaways

Every game has different needs, and there is no one-size-fits-all solution to optimizing a game’s performance. It can be daunting at first, but there is a methodical approach you can use to see what’s going on. Knowing how to measure the impact of various features will point you in the right direction when you need to optimize your own game.

Because we’re building a flexible game engine that powers our whole library of games, our optimization efforts can’t be tailored to one specific game or application. That makes optimization even more challenging, and more satisfying to solve. If these kinds of engineering challenges appeal to you, check out our open engineering positions at https://www.funovus.com/careers.
